Juanjo Valiño

Wrapfast

Shihab Mehboob

Bulletin

James Rochabrun

SwiftOpenAI

Sam McGarry

Cue

Security, observability and control.

Key security and DeviceCheck.

AIProxy uses a combination of split key encryption and DeviceCheck to prevent your key and endpoint from being stolen or abused.

Monitor usage on our dashboard.

Our dashboard helps you keep an eye on your usage and get a deeper understanding of how users are interacting with AI in your app.

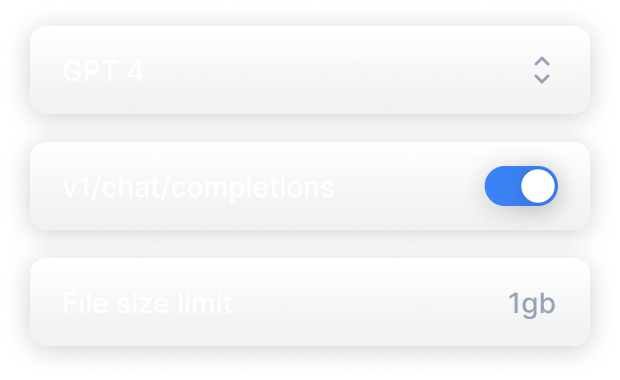

Hot swap models and proxy rules.

Wanna switch from GPT-3.5 to GPT-4? No problem! You can change models and parameters right from the dashboard without shipping an app update.

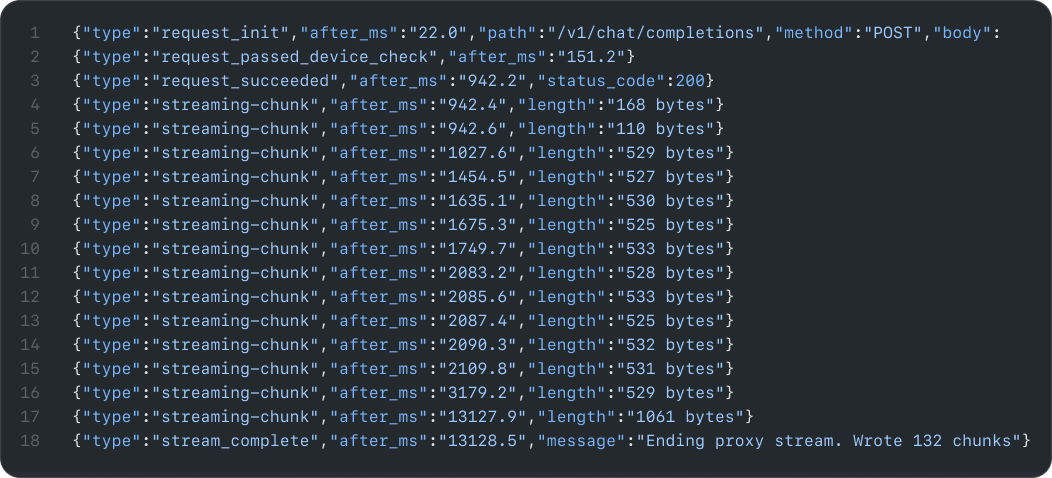
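One way a proxy can make model changes without an app update is to overwrite selected fields of the outgoing request body with values stored server-side. The sketch below is a minimal illustration under that assumption, not AIProxy's implementation. `PROJECT_RULES` and `apply_overrides` are hypothetical names, and only `model` and `max_tokens` are real OpenAI Chat Completions parameters.

```python
import json

# Hypothetical server-side rules, editable from a dashboard without an app update.
PROJECT_RULES = {
    "model": "gpt-4",
    "max_tokens": 512,
}


def apply_overrides(request_body: str, rules: dict) -> str:
    """Return the request body with dashboard-controlled fields overwritten."""
    payload = json.loads(request_body)
    payload.update(rules)  # server-side values win over whatever the app sent
    return json.dumps(payload)


if __name__ == "__main__":
    # The shipped app still asks for gpt-3.5-turbo; the proxy swaps in gpt-4.
    app_request = json.dumps({
        "model": "gpt-3.5-turbo",
        "messages": [{"role": "user", "content": "Hello"}],
    })
    print(apply_overrides(app_request, PROJECT_RULES))
```

Because the override happens in the proxy, every installed copy of the app picks up the new model on its next request.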

Test API calls with live console.

Use the live console to test your OpenAI calls from your app. Find errors and get a better understanding of performance.

Alerts to keep you alert.

Get alerts when there's suspicious activity so you can take quick action.

Built to scale.

Built on AWS, our service scales horizontally to meet demand.

Frequently Asked Questions

We don't actually store any customer OpenAI keys. Instead, we encrypt your key and store one part of the encrypted result in our database. On its own, this fragment can't be reversed into your secret key. The other part of the encrypted result is sent up with requests from your app. When the two pieces are combined, we derive your secret key and fulfill the request to OpenAI.
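The exact cipher isn't specified here. To illustrate the general property (neither piece is useful without the other), here is a minimal 2-of-2 XOR secret split. This is a swapped-in textbook technique, not AIProxy's actual encryption scheme, and `split_secret` and `combine_shares` are hypothetical names.

```python
import secrets


def split_secret(secret: bytes) -> tuple[bytes, bytes]:
    """Split a secret into two shares; each share alone is uniformly random.

    Illustrative XOR splitting only, not AIProxy's real scheme.
    """
    server_share = secrets.token_bytes(len(secret))  # would live in the database
    client_share = bytes(a ^ b for a, b in zip(secret, server_share))  # would ship with the app
    return server_share, client_share


def combine_shares(server_share: bytes, client_share: bytes) -> bytes:
    """Recover the secret by XOR-ing the two shares back together."""
    if len(server_share) != len(client_share):
        raise ValueError("shares must be the same length")
    return bytes(a ^ b for a, b in zip(server_share, client_share))


if __name__ == "__main__":
    server_share, client_share = split_secret(b"sk-example-not-a-real-key")
    print(combine_shares(server_share, client_share))
```

Since the server share is random bytes, the client share on its own reveals nothing about the key beyond its length, and the same holds in reverse.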

We have multiple mechanisms in place to restrict endpoint abuse:

1. Your AIProxy project comes with proxy rules that you configure. In the proxy rules section, you can enable only the endpoints your app depends on. For example, if your app depends on /v1/chat/completions, you would permit proxying of requests to that endpoint and block all others. This makes your endpoint less desirable to attackers.

2. We use Apple's DeviceCheck service to ensure that requests to AIProxy originated from your app running on legitimate Apple hardware.

3. We guarantee that DeviceCheck tokens are used only once, which prevents an attacker from replaying a token sniffed from the network.
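Mechanisms 1 and 3 can be sketched together as a small request gate. This is a minimal illustration under stated assumptions: `ALLOWED_PATHS`, `SingleUseTokenCache`, `gate_request`, and the status codes are hypothetical, and the actual verification of the token with Apple's DeviceCheck service (mechanism 2) is left out.

```python
# Hypothetical proxy rules: only these upstream paths may be proxied.
ALLOWED_PATHS = {"/v1/chat/completions"}


class SingleUseTokenCache:
    """Accepts each token at most once (in-memory, single process only)."""

    def __init__(self) -> None:
        self._seen: set[str] = set()

    def consume(self, token: str) -> bool:
        """Return True the first time a token is presented, False on any replay."""
        if token in self._seen:
            return False
        self._seen.add(token)
        return True


def gate_request(path: str, device_check_token: str,
                 cache: SingleUseTokenCache) -> tuple[int, str]:
    """Return a hypothetical (HTTP status, reason) pair for an incoming request."""
    # Mechanism 1: block any endpoint not enabled in the proxy rules.
    if path not in ALLOWED_PATHS:
        return 403, "endpoint not enabled in proxy rules"
    # Mechanism 2 (asking Apple whether the token is valid) is omitted here.
    # Mechanism 3: reject a token that has already been used once.
    if not cache.consume(device_check_token):
        return 401, "DeviceCheck token already used"
    return 200, "ok"


if __name__ == "__main__":
    cache = SingleUseTokenCache()
    print(gate_request("/v1/chat/completions", "token-abc", cache))
    print(gate_request("/v1/chat/completions", "token-abc", cache))  # replayed
```

The path check runs first, so a request to a blocked endpoint does not burn the token. An in-memory set only works within one process; a horizontally scaled deployment would need a shared store with an atomic insert-if-absent, for example a conditional write in a database.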

The proxy is deployed on AWS Lambda, meaning we can effortlessly scale horizontally behind a load balancer.

Upon configuring your project in the developer dashboard, you'll receive initialization code to drop into the SwiftOpenAI client. Alternatively, you can use a bootstrap product like WrapFast.